Transfer Learning with PyTorch

Transfer learning is a technique for re-training an existing deep neural network (DNN) on a new dataset, which takes less time than training a network from scratch. The weights of a pre-trained model are fine-tuned so that it can classify a custom dataset.

Re-training the Jetson ResNet-18 Model

ResNet-18 is a convolutional neural network (CNN) with 18 layers, specifically designed for image recognition and classification tasks.

ResNet-18 is available to us as a pre-trained model on the Jetson Orin. It was trained on the ImageNet dataset, which contains over a million images spread across 1000 object categories. These categories include a wide variety of everyday objects, such as keyboards, mice, pencils, and many different animals.

My intention is to use transfer learning with PyTorch to train our model further on a small but idiosyncratic set of additional objects: vintage Meccano parts and Mamod Steam Engine accessories.

Collecting the Images

Mamod Steam Engine Accessories

The PyTorch scripts we will eventually use to train our model on the Jetson Orin (the Pi is simply not powerful enough for training purposes) require our training data to be structured in a particular way.

NVIDIA provide a camera-capture tool which runs on the Orin, and this allows us to capture data that is already structured for input to the model.

The Raspberry Pi has a wide range of camera modules and a rich Python library to support them. I thought it would be interesting to reimplement this tool in a Raspberry Pi context, and in this blog we will be using that reimplementation.

The camera we will be using is the Global Shutter Camera fitted with a Raspberry Pi 6mm wide angle lens.

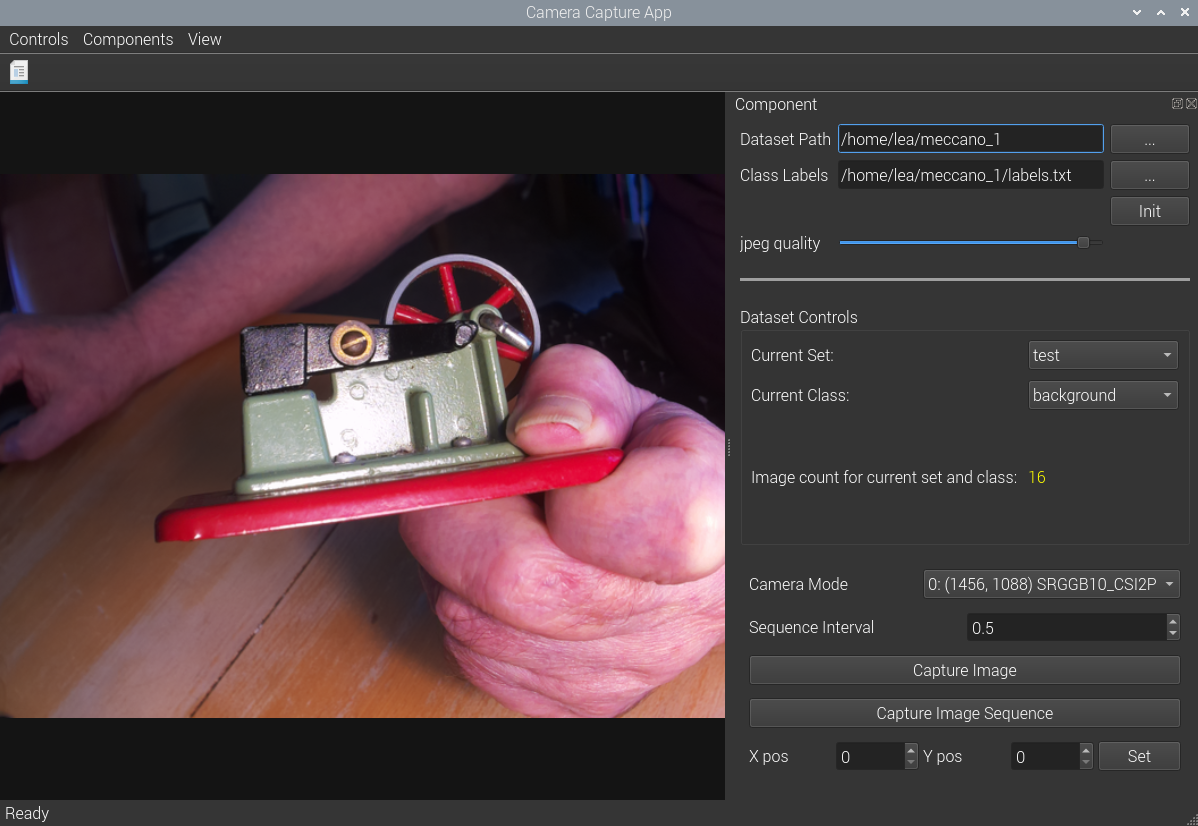

For our training we need to collect a wide variety of images. Here is a view of the app:

Raspberry Pi version of Jetson camera-capture app

This app allows us to categorize the images into three sets: one for training purposes, one for validation during training, and a third set of test images which we can use to subsequently evaluate the model ourselves.

The app files each captured image first under one of these three sets and then under its class name: green_grinder, hammer_horiz, hammera_vert, tin, spanner and so on.
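
As a rough illustration of that layout, the sketch below creates one folder per set and per class. The folder names `train`, `val` and `test`, the `labels.txt` file listing the classes, and the helper name `make_dataset_dirs` are assumptions on my part, modelled on what the Jetson classification tools appear to expect; check them against your own training script.

```python
from pathlib import Path
from typing import Iterable, List

# Assumed folder names for the three sets described above.
SPLITS = ("train", "val", "test")


def make_dataset_dirs(root: Path, class_names: Iterable[str]) -> List[Path]:
    """Create root/<split>/<class>/ for every split and class.

    Also writes root/labels.txt with one class name per line,
    sorted alphabetically. Hypothetical helper for illustration.
    """
    classes = sorted(set(class_names))
    created = []
    for split in SPLITS:
        for name in classes:
            folder = root / split / name
            folder.mkdir(parents=True, exist_ok=True)
            created.append(folder)
    (root / "labels.txt").write_text("\n".join(classes) + "\n")
    return created
```

For example, `make_dataset_dirs(Path("data/mamod"), ["green_grinder", "hammer_horiz", "tin", "spanner"])` would produce folders such as `data/mamod/train/spanner` and `data/mamod/val/tin`, where `data/mamod` is just a placeholder path.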

Images can be captured individually or as a sequence with a configurable time interval.
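
The sequence mode boils down to a simple timed loop. The sketch below is not the app's actual code: `capture_sequence` is a hypothetical helper that takes any function able to save one image to a given path, so it can be tried without a camera attached.

```python
import time
from pathlib import Path
from typing import Callable, List


def capture_sequence(capture: Callable[[str], object],
                     out_dir: Path,
                     count: int,
                     interval_s: float = 0.5,
                     sleep: Callable[[float], None] = time.sleep) -> List[Path]:
    """Save `count` images into `out_dir`, pausing `interval_s` between shots.

    `capture` is called with the target file path for each image.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    saved = []
    for i in range(count):
        path = out_dir / f"{i:05d}.jpg"
        capture(str(path))
        saved.append(path)
        if i < count - 1:
            # No need to wait after the final image.
            sleep(interval_s)
    return saved


# On a Raspberry Pi, assuming the picamera2 library is installed,
# the camera's own capture_file method could be passed in:
#
#   from picamera2 import Picamera2
#   picam2 = Picamera2()
#   picam2.configure(picam2.create_still_configuration())
#   picam2.start()
#   capture_sequence(picam2.capture_file, Path("data/train/spanner"), count=100)
#   picam2.stop()
```

Passing the capture function in as a parameter keeps the timing logic separate from the camera, which also makes it easy to swap in a different camera module later.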

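I found that with a time interval of half a second it was possible to collect 1500 or so images in fifteen or twenty minutes (at two images per second, the capturing itself accounts for about twelve and a half minutes of that). This number proved quite adequate when subsequently training on the Jetson Orin.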
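
Before moving the data over to the Orin, it can be handy to check how many images ended up in each set and class. This is a small sketch of my own rather than part of the capture app; it assumes the images sit under `<root>/<set>/<class>/` and have a `.jpg`, `.jpeg` or `.png` extension.

```python
from collections import Counter
from pathlib import Path

# Assumed image file extensions.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images(root: Path) -> Counter:
    """Count image files per (set, class) under root/<set>/<class>/."""
    counts = Counter()
    for path in root.glob("*/*/*"):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            class_name = path.parent.name
            split = path.parent.parent.name
            counts[(split, class_name)] += 1
    return counts
```

Printing `sum(count_images(root).values())` gives the overall total, and the individual entries show quickly whether any class is badly under-represented.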